Whenever you publish a new post on your blog or website and use an RSS feed, the site adds the post to the RSS XML with the date and time of publishing, and that URL keeps the same timestamp forever. If you rely on automated feed burners, email services, or social media tools that read this RSS XML and send its contents to your audience, each post reaches your followers only once, when it is first published.

Wouldn’t it be great to have a tool that automatically scans the URLs of your website, picks a few at random, and emails them to your followers or posts them to your social media platforms? The script described below does exactly that. It takes a predefined set of URLs saved in a text file, shuffles them, picks only a few, and writes an RSS-compatible XML file with the current timestamp, so automated tools such as Google RSS Feed Subscriber treat the posts as if they had been published recently.
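Before the full script, here is a minimal sketch of the two building blocks it relies on: picking a few random lines with shuf and producing an RSS-style date string with date. The file name sample_urls.txt and the example.com URLs are placeholders invented for this illustration, and the sketch assumes GNU coreutils.

```shell
#!/bin/bash
# Sketch only: sample_urls.txt and its contents are made-up placeholders.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

printf '%s\n' \
  "https://example.com/post-1/" \
  "https://example.com/post-2/" \
  "https://example.com/post-3/" \
  "https://example.com/post-4/" \
  "https://example.com/post-5/" > sample_urls.txt

# shuf -n 3 prints 3 lines chosen at random, without repeating a line.
shuf -n 3 sample_urls.txt > picked_urls.txt
cat picked_urls.txt

# RSS 2.0 expects pubDate in RFC 822 form, e.g. "Wed, 27 Feb 2019 21:19:34 +0530".
# LC_ALL=C keeps the day and month names in English regardless of the system locale.
PUB_DATE=$(LC_ALL=C date +"%a, %d %b %Y %H:%M:%S %z")
echo "$PUB_DATE"
```

Each run picks a different subset, which is what makes the regenerated feed look fresh to the tools that poll it.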

$ vim generate_xml.sh

WEBSITE_URL_FILE=only_uniq_urls.txt

SHUFFLED_URL_FILE=shuffled_urls.txt

shuf -n 3 $WEBSITE_URL_FILE > $SHUFFLED_URL_FILE

echo "<?xml version=\"1.0\" encoding=\"UTF-8\" ?>

<rss version=\"2.0\">

<channel>

<title>Lynxbee</title>

<link>https://lynxbee.com</link>

<description>Linux, Android and Opensource software knowledge platform</description>" > custom-rss.xml

while IFS= read -r line

do

WEBSITE_URL=$line

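# Named TZ_OFFSET rather than TZ so the script does not overwrite the TZ variable that date itself reads

TZ_OFFSET=$(date +"%z")

TIME=$(date +"%H:%M:%S")

WEEKDAY=$(date +"%a")

DATE=$(date +"%d")

MONTH=$(date +"%b")

YEAR=$(date +"%Y")

FINAL="$WEEKDAY, $DATE $MONTH $YEAR $TIME $TZ_OFFSET"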

echo "<item>" >> custom-rss.xml

echo "<title> Lynxbee $TIME </title>" >> custom-rss.xml

echo "<link>$line</link>" >> custom-rss.xml

echo "<pubDate>$FINAL</pubDate>" >> custom-rss.xml

echo "<description> Linux, Android and Opensource software $TIME </description>" >> custom-rss.xml

echo "</item>" >> custom-rss.xml

sleep 1

done < $SHUFFLED_URL_FILE

echo "</channel>

</rss>" >> custom-rss.xml

Now, create the only_uniq_urls.txt file, which contains the full list of your website’s URLs, one per line. The script builds the RSS XML from a random subset of them.

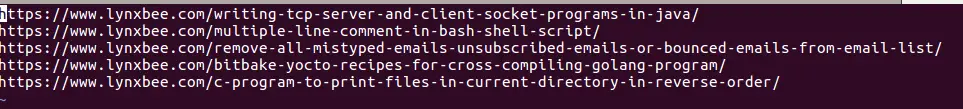

$ vim only_uniq_urls.txt
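If your URL list comes from an export or a crawl rather than being typed by hand, it may contain duplicates or blank lines. A minimal sketch of cleaning it up, assuming a hypothetical raw file named all_urls.txt (the name and the example.com URLs are placeholders, not part of the original setup):

```shell
#!/bin/bash
# Sketch only: all_urls.txt is a hypothetical raw list that may contain
# duplicates and blank lines; the URLs are placeholders.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

cat > all_urls.txt <<'EOF'
https://example.com/post-1/
https://example.com/post-2/

https://example.com/post-1/
https://example.com/post-3/
EOF

# Drop blank lines, then sort and remove duplicates, leaving one URL per line.
grep -v '^[[:space:]]*$' all_urls.txt | sort -u > only_uniq_urls.txt

cat only_uniq_urls.txt
```

A blank line left in the list could otherwise be picked by shuf and end up as an empty link element in the feed.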

Now, let’s generate the XML by running the generate_xml.sh script:

$ bash generate_xml.sh

Once you run the above script, the line “shuf -n 3 $WEBSITE_URL_FILE > $SHUFFLED_URL_FILE” creates another text file, shuffled_urls.txt, containing only 3 URLs picked at random from only_uniq_urls.txt. The script then reads shuffled_urls.txt line by line and creates an RSS-compatible XML file, custom-rss.xml, as below:

<?xml version="1.0" encoding="UTF-8" ?>

<rss version="2.0">

<channel>

<title>Lynxbee</title>

<link>https://lynxbee.com</link>

<description>Linux, Android and Opensource software knowledge platform</description>

<item>

<title> Lynxbee 21:19:34 </title>

<link>https://lynxbee.com/c-program-to-print-files-in-current-directory-in-reverse-order/</link>

<pubDate>Wed, 27 Feb 2019 21:19:34 +0530</pubDate>

<description> Linux, Android and Opensource software 21:19:34 </description>

</item>

<item>

<title> Lynxbee 21:19:35 </title>

<link>https://lynxbee.com/bitbake-yocto-recipes-for-cross-compiling-golang-program/</link>

<pubDate>Wed, 27 Feb 2019 21:19:35 +0530</pubDate>

<description> Linux, Android and Opensource software 21:19:35 </description>

</item>

<item>

<title> Lynxbee 21:19:36 </title>

<link>https://lynxbee.com/remove-all-mistyped-emails-unsubscribed-emails-or-bounced-emails-from-email-list/</link>

<pubDate>Wed, 27 Feb 2019 21:19:36 +0530</pubDate>

<description> Linux, Android and Opensource software 21:19:36 </description>

</item>

</channel>

</rss>
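The URLs in this sample output contain no XML special characters, but a URL with a query string (an & between parameters) would make the feed ill-formed if written as-is. A minimal sketch of escaping a URL before it goes into the link element follows. The helper name xml_escape and the example URL are assumptions made for illustration and are not part of the original script.

```shell
#!/bin/bash
# Sketch only: xml_escape is a hypothetical helper, not part of the original script.
xml_escape() {
    # Replace & first so the entities added by the later rules are not escaped again.
    printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

URL='https://example.com/?p=12&preview=true'   # placeholder URL
ESCAPED=$(xml_escape "$URL")
echo "<link>$ESCAPED</link>"

# Optional check, if xmllint (from libxml2) happens to be installed:
#   xmllint --noout custom-rss.xml
# It prints nothing when the file is well-formed XML.
```

In generate_xml.sh, the same idea would mean passing $line through such a helper before echoing the link element.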